Biography

Rami Al-Rfou is a Senior Staff Research Scientist at Waymo Research, where he leads a team building foundational models for motion and driving, drawing on his expertise in large language models.

Previously, Rami was a technical lead for assisted writing applications such as SmartReply at Google Research. His research focused on improving the pretraining of large language models through token-free architectures, synthetic datasets constructed with knowledge-base-driven generative models, and improved sampling strategies for multilingual datasets. These pretrained language models, trained on more than 100 languages, are used in query understanding, web page understanding, semantic search, and response ranking in conversations.

Al-Rfou’s research goes beyond language into designing better architectures for understanding large-scale data such as graphs. Al-Rfou repurposes language modeling tools to produce novel graph learning algorithms that measure node and graph similarities. These modeling ideas have been deployed for spam detection and personalization applications at large scale.

Al-Rfou received his PhD in Computer Science from Stony Brook University in 2015 under the supervision of Prof. Steven Skiena. He investigated how to use deep learning representations to build a massively multilingual NLP pipeline that supports more than 100 languages. Massively multilingual modeling has gained significant momentum in the years since. Al-Rfou’s experience in sequential modeling and cross-lingual applications spans 10 years of academic and industrial research, with applications that have touched the lives of millions of users and open-source code that has helped thousands of students.

Experience

Senior Staff Research Scientist

Waymo Research

Responsibilities include:

- Foundational Motion Models TLM

Staff Research Scientist

Google Research

Responsibilities include:

- SmartReply Technical Lead

- Deep Retrieval Research Lead

Research Intern

Microsoft Research

“Investigated new ways to improve semi-supervised learning with word embeddings.”

Research Intern

Google Research

“Developed a language-independent, semi-supervised method for multilingual coreference resolution utilizing word embeddings and a fine-tuned dual-encoder ranking model.”

Software Engineer Intern

“Developed a visualization system for Google’s data centers' internal networks.”

Education

PhD in Natural Language Processing

Stony Brook University

Committee: Yejin Choi, Leman Akoglu, Leon Bottou

BSc. in Computer Engineering

University of Jordan

GPA: 3.79/4.0

Talks

Projects

Featured Publications

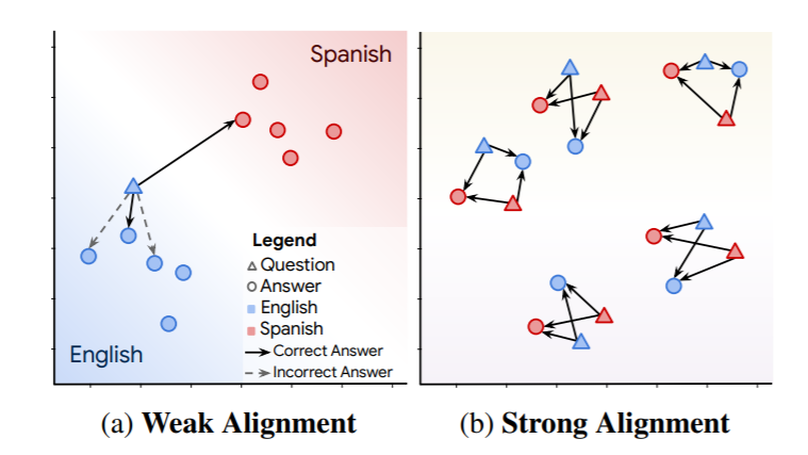

LAReQA: Language-agnostic answer retrieval from a multilingual pool

We present LAReQA, a challenging new benchmark for language-agnostic answer retrieval from a multilingual candidate pool. Unlike previous cross-lingual tasks, LAReQA tests for “strong” cross-lingual alignment, requiring semantically related cross-language pairs to be closer in representation space than unrelated same-language pairs. This level of alignment is important for the practical task of cross-lingual information retrieval. Building on multilingual BERT (mBERT), we study different strategies for achieving strong alignment. We find that augmenting training data via machine translation is effective, and improves significantly over using mBERT out-of-the-box. Interestingly, model performance on zero-shot variants of our task that only target “weak” alignment is not predictive of performance on LAReQA. This finding underscores our claim that language-agnostic retrieval is a substantively new kind of cross-lingual evaluation, and suggests that measuring both weak and strong alignment will be important for improving cross-lingual systems going forward. We release our dataset and evaluation code at https://github.com/google-research-datasets/lareqa

Recent Publications

Patents

Systems and Methods for Determining Graph Similarity

US Patent Application US16/850,570

Selective text prediction for electronic messaging

US Patent Application US15/852,916

Cooperatively training and/or using separate input and subsequent content neural networks for information retrieval

US Patent Application US15/476,280

Cooperatively training and/or using separate input and response neural network models for determining response(s) for electronic communications

US Patent Application US15/476,292

Iteratively learning coreference embeddings of noun phrases using feature representations that include distributed word representations of the noun phrases

Issued Oct 02, 2017 US 9514098 B1

Contact

- 631 371 3165

- 701 N Rengstorff Avenue, Apt 19, Mountain View, CA 94043
